How smaller, more efficient models are changing the game

For years, the narrative around artificial intelligence development has followed a predictable pattern: bigger is better. Larger models trained on more data with more computational resources were seen as the only path forward. This approach has produced remarkable achievements, but it has also created significant barriers to entry for smaller organizations that want to apply AI to their specific needs.

However, a major shift is underway that challenges this established paradigm. The advent of Deepseek has punctured the perception (or perhaps misconception) that the only way to make an AI model better is to throw more power, more GPUs, and more data at it. This is a hopeful trend for businesses and developers who have long awaited smaller yet more sophisticated models that can run locally and address their unique requirements.

The impact is already being felt across the technology ecosystem. Having sold hundreds of thousands of GPUs through multiple cryptocurrency cycles, our company is seeing unprecedented market dynamics that tie directly back to this architectural breakthrough. We’re currently experiencing the most unusual GPU market we’ve ever seen: prices for even older-generation cards rose after the holidays, without a crypto rally to drive them.

Beyond One-Size-Fits-All AI

One-size-fits-all models often fail to meet the specific needs of individual businesses. Each organization has unique data, workflows, and challenges that require tailored solutions. Yet, the prospect of training and fine-tuning a model for each use case has remained prohibitively expensive, placing advanced AI capabilities out of reach for all but the largest enterprises.

This is where Deepseek’s innovative approach comes into play. By fundamentally rethinking how models are trained and structured, Deepseek has dramatically reduced the cost of creating powerful AI systems. The key insight lies in their method of leaving much of the computational work to the inference stage, which can be performed on a single GPU when working with appropriately sparse models.

Consumer Hardware Running Advanced AI

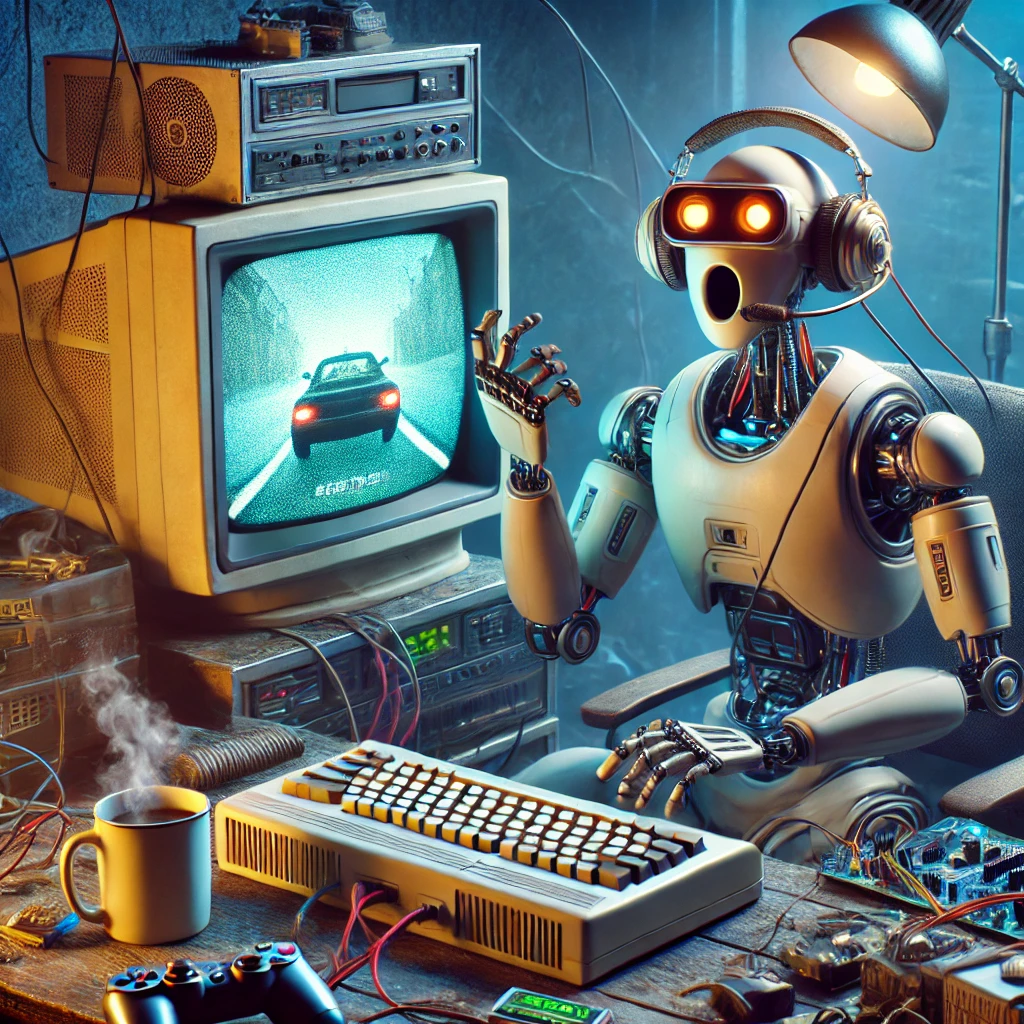

To appreciate the practical implications of this shift, consider the demonstration by popular YouTuber Bits Be Trippin, who ran Deepseek not on data-center-grade H100 GPUs but on consumer-grade hardware. This isn’t just a technical curiosity; it represents a fundamental democratization of AI technology.

A setup like this can bring sophisticated AI applications within reach of small businesses, reducing both privacy concerns and dependency on cloud service providers. When organizations can run models locally on affordable hardware, they retain control over their data while still leveraging powerful AI capabilities.
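
As a rough illustration of what "affordable hardware" has to handle, the sketch below estimates the memory needed just to hold a model's weights at a given precision, then greedily splits consecutive layers across several consumer cards. The 70B parameter count, 80-layer depth, 4-bit precision, and 24 GB cards are hypothetical values chosen for illustration, not details of the demonstration above, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def assign_layers(num_layers: int, layer_gb: float, cards_gb: list[float]) -> list[int]:
    """Greedily place consecutive layers on cards, filling each card in order.

    Returns the number of layers held by each card; raises ValueError if the
    layers do not fit. Ignores KV cache, activations, and runtime overhead.
    """
    counts = [0] * len(cards_gb)
    card, used = 0, 0.0
    for _ in range(num_layers):
        # Advance to the next card when this layer would overflow the current one.
        while card < len(cards_gb) and used + layer_gb > cards_gb[card]:
            card, used = card + 1, 0.0
        if card == len(cards_gb):
            raise ValueError("model does not fit on the given cards")
        counts[card] += 1
        used += layer_gb
    return counts


# Hypothetical example: a 70B-parameter model quantized to 4 bits (0.5 bytes/param).
total_gb = weight_memory_gb(70e9, 0.5)
print(total_gb)                                          # 35.0
print(assign_layers(80, total_gb / 80, [24.0, 24.0]))    # [54, 26]
```

At 16-bit precision (2 bytes per parameter) the same hypothetical model would need about 140 GB for its weights alone, which is why quantization and multi-card splits tend to go together on consumer hardware.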

The market has already begun to respond to these developments. In our decades of GPU retail experience, we’ve observed that prices for consumer GPUs typically don’t rise after the holidays, nor do prices for older models rise when newer models are released. Yet that’s exactly what’s happening now. This unusual market behavior suggests that Deepseek’s innovation has fundamentally altered the value proposition of consumer graphics cards by making them viable for AI workloads previously exclusive to specialized enterprise hardware.

The Technical Innovation Behind Deepseek

What makes Deepseek’s approach so revolutionary? Rather than simply scaling existing architectures to larger sizes, Deepseek employs innovative techniques that focus on model efficiency:

- Mixture of Experts (MoE) Architecture with Extreme Sparsity: Deepseek uses a remarkably sparse MoE approach in which only about 1/32 of the routed experts (8 of 256 in each MoE layer) are active for any given token, a 32:1 sparsity ratio. This is significantly more efficient than previous MoE models like Mixtral, which typically activate 1/4 to 1/8 of their parameters.

- Transfer of Computational Load: Instead of doing all the heavy lifting during training, Deepseek’s approach shifts more computational work to inference time, when the model is actually being used.

- Optimization for Consumer Hardware: This architectural approach makes it possible to split a large model across multiple cards, each with a smaller amount of VRAM.

- Improved Model Distillation: The techniques for compressing larger models into smaller, more efficient versions have significantly advanced, making consumer-grade hardware increasingly viable for running sophisticated AI systems.
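
To make the sparsity figure concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It assumes a softmax gate over the selected experts, toy scalar "experts", and random stand-in router scores in place of a learned router; the 256-expert, 8-active setting mirrors the published DeepSeek-V3 configuration, but the gating function itself is an illustration, not DeepSeek's implementation.

```python
import math
import random


def top_k_route(logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts and softmax-normalize their gate weights."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Experts outside `top` get zero weight and are never evaluated.
    m = max(logits[i] for i in top)          # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float, experts, router_logits: list[float], k: int) -> float:
    """Weighted sum of the outputs of only the k selected experts."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


NUM_EXPERTS, K = 256, 8                      # 8 of 256 routed experts -> 1/32 active
random.seed(0)
# Toy experts: expert s simply multiplies its input by s.
experts = [lambda x, s=s: s * x for s in range(NUM_EXPERTS)]
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router scores

chosen = top_k_route(logits, K)
print(len(chosen), K / NUM_EXPERTS)          # 8 0.03125
print(moe_forward(1.0, experts, logits, K))
```

The key property is that compute per token depends on `K`, not on `NUM_EXPERTS`: adding experts grows the model's capacity without growing the work done for each token.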

These innovations allow powerful models to run on a fraction of the computational resources their predecessors require without sacrificing performance on key tasks.
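
The distillation point in the list above is often implemented by training a small "student" model to match a larger "teacher" model's temperature-softened output distribution. The sketch below computes that classic soft-target loss in plain Python; it is one generic formulation, not the specific recipe Deepseek used, and the teacher logits are hypothetical values for a single token.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature yields a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T**2.

    The T**2 factor keeps gradient magnitudes roughly comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the large teacher
    q = softmax(student_logits, temperature)  # the small student's softened predictions
    return temperature ** 2 * sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.0, 0.5]                     # hypothetical teacher logits for one token
print(distillation_loss(teacher, teacher))    # 0.0: student matches teacher exactly
print(distillation_loss([0.5, 1.0, 4.0], teacher) > 0)  # True: student disagrees
```

Softening with a temperature above 1 exposes how the teacher ranks the "wrong" answers, which gives the student more signal per example than hard labels alone.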

Democratizing AI: The Broader Implications

The ability to run sophisticated AI models on accessible hardware has far-reaching implications:

- Reduced Barriers to Entry: Small businesses and startups can now implement custom AI solutions without massive infrastructure investments. Based on our analysis, gaming and mining cards are, relative to the state of the art, substantially more useful for AI applications than they were just a few months ago.

- Enhanced Privacy: Data can remain on-premises rather than being sent to cloud providers, addressing a major concern for businesses that handle sensitive customer data or proprietary information.

- Greater Customization: With lower resource requirements, organizations can afford to develop models tailored to their specific needs rather than relying on general-purpose solutions. This addresses the core problem that one-size-fits-all models rarely match what an individual business needs.

- Inference vs. Training Cost Distribution: While training frontier models still requires significant resources, running these models (inference) is becoming increasingly feasible on consumer hardware. From our perspective, no one will be training cutting-edge models on consumer GPUs anytime soon, but running inference with those models is quite possible with the right architecture.

- Sustainability: More efficient models mean less energy consumption, reducing the environmental impact of AI deployment. The sparse activation approach utilized by Deepseek means that much less computational power is required to run sophisticated AI systems.

- Rapid Evolution: The pace of innovation in this space is accelerating dramatically. We’re witnessing capabilities that were impossible a month ago become possible today, highlighting the dynamic and fast-changing nature of AI development.
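
The training-versus-inference gap can be estimated with a widely used rule of thumb: training a transformer costs roughly 6 FLOPs per parameter per training token, while generating one token costs roughly 2 FLOPs per parameter. For a sparse MoE model, the parameter count in both estimates is the number of parameters active per token, not the total. The sketch below applies that approximation; the model sizes and token count are hypothetical, and real costs also depend on attention, sequence length, and hardware efficiency.

```python
def training_flops(active_params: float, training_tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per active parameter per training token."""
    return 6.0 * active_params * training_tokens


def inference_flops_per_token(active_params: float) -> float:
    """Rule of thumb: ~2 FLOPs per active parameter per generated token."""
    return 2.0 * active_params


# Hypothetical sizes: a sparse model with 600B total parameters, 40B active per token,
# compared against a dense model that uses all 600B parameters for every token.
SPARSE_ACTIVE, DENSE_ACTIVE = 40e9, 600e9
TRAINING_TOKENS = 10e12                       # hypothetical training-set size

sparse = inference_flops_per_token(SPARSE_ACTIVE)
dense = inference_flops_per_token(DENSE_ACTIVE)
print(f"dense vs. sparse compute per token: {dense / sparse:.0f}x")   # 15x
ratio = training_flops(SPARSE_ACTIVE, TRAINING_TOKENS) / sparse
print(f"training cost in units of one generated token: {ratio:.1e}")  # 3.0e+13
```

Note what the estimate leaves out: compute per token scales with active parameters, but every expert still has to sit in memory, so the total parameter count still drives how much VRAM a deployment needs.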

Looking Forward: A New Paradigm for AI Development

The Deepseek approach suggests a new direction for AI research and development. Rather than focusing exclusively on scaling up existing architectures, the field may benefit from increased attention to efficiency, sparsity, and techniques that distribute computational load more effectively.

For businesses considering AI adoption, this shift offers hope that they won’t need to choose between powerful capabilities and practical implementation. As more developers follow Deepseek’s lead in creating efficient, accessible models, we may see an explosion of innovative applications tailored to specific needs across industries.

This transformation has been partly driven by necessity. Chinese researchers, faced with export restrictions on advanced hardware, were forced to find more creative approaches to AI development—and they succeeded beyond most expectations. These constraints led to innovations that might otherwise have taken years to develop, accelerating the democratization of AI technology.

Looking ahead, we believe we may be entering an era where sophisticated AI workloads could be distributed across consumer hardware in ways reminiscent of early cryptocurrency mining. While not guaranteed, we wouldn’t rule out the possibility of people serving up inference tokens from garages and small data centers, similar to how cryptocurrency miners served up hashes during the crypto boom. The realm of possibilities has widened considerably.

The future of AI may not be about having the most computational power, but about using available resources most intelligently. In this new paradigm, smaller organizations won’t just be consumers of AI developed by tech giants—they’ll be active participants in shaping how AI is used to solve real-world problems.

As consumer-grade hardware becomes capable of running increasingly sophisticated models, we’re moving toward a world where AI is truly democratized—available to all who can benefit from it, not just those with massive computational resources at their disposal. The pace of change is breathtaking; what seems impossible today may be commonplace tomorrow.